I am rather bad at the whole concept of relaxation. I say this as an introduction, because writing this post is my idea of taking a break from putting together the final presentation for my class on user testing.

The course has been interesting overall. I will readily admit that I don’t intend to make a career of user research, but I am glad I’ve had the experience. Research is, after all, critical to design and development both. We can try to stick to the whole “if we asked people what they wanted, they would’ve said ‘a faster horse’” thing, but, shockingly, we actually aren’t omniscient. We aren’t our users.

Throughout the course of the course (and now you can tell my brain is a bit fried, because I’m throwing in wordplay), we’ve used a few different techniques. My favorite so far has been card sorting: it’s easy to explain, quick to do, and works very well for figuring out a sensible way to organize a bunch of stuff.¹

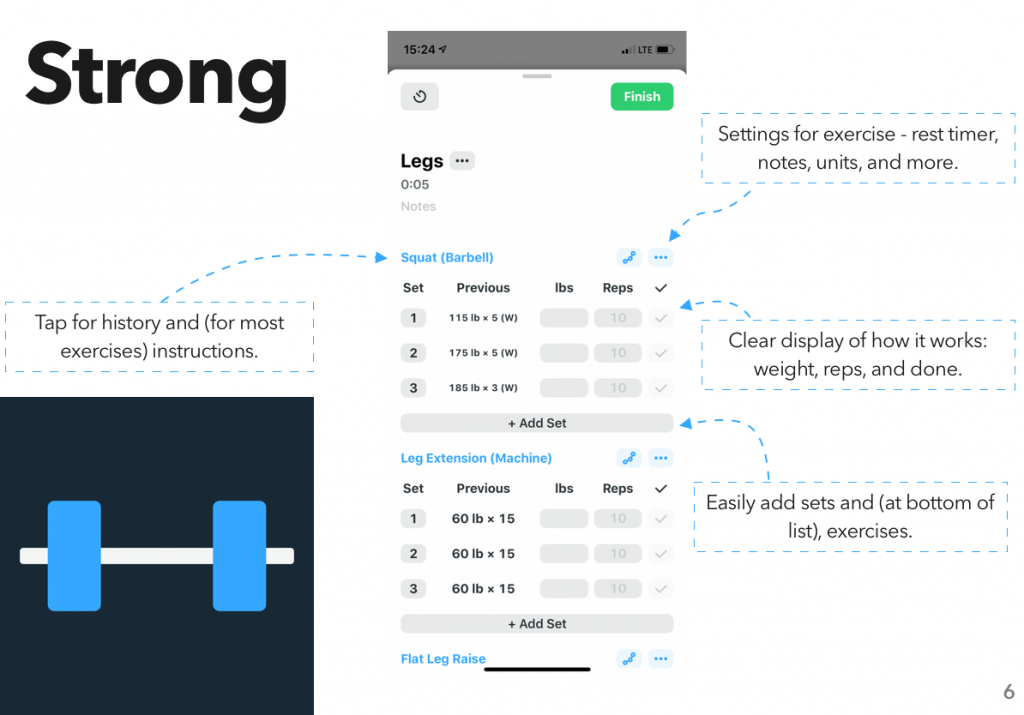

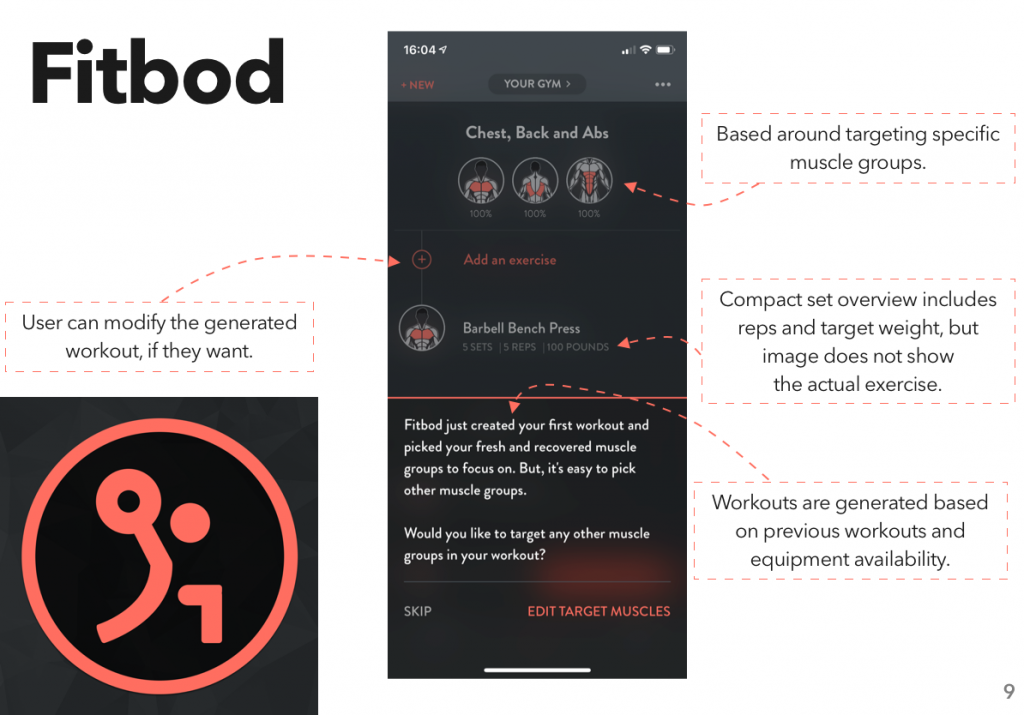

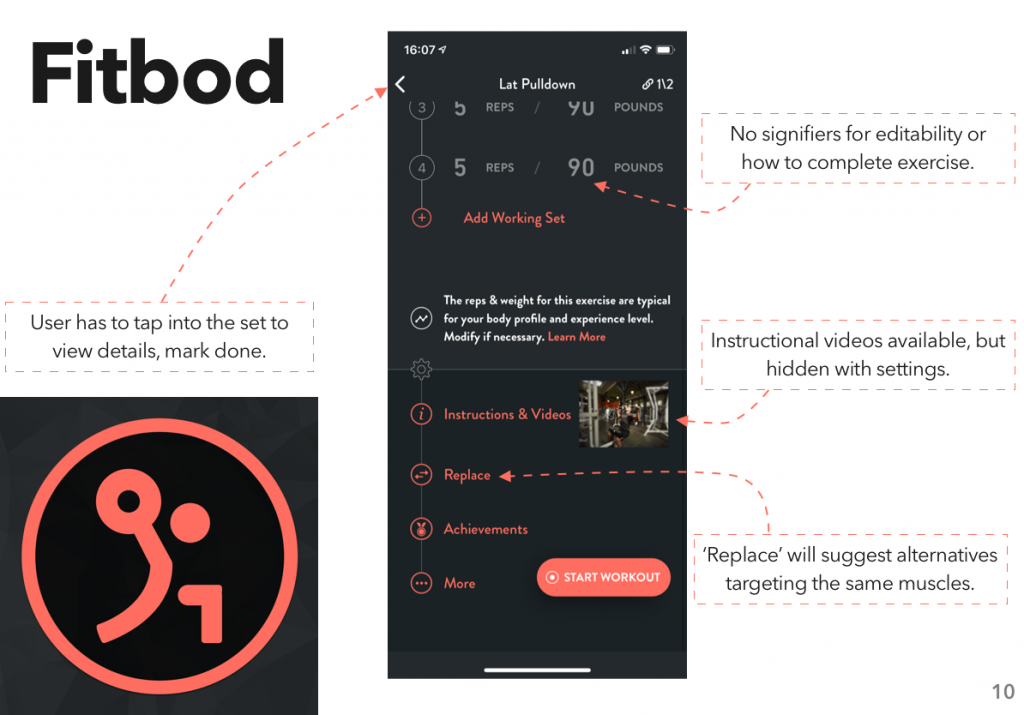

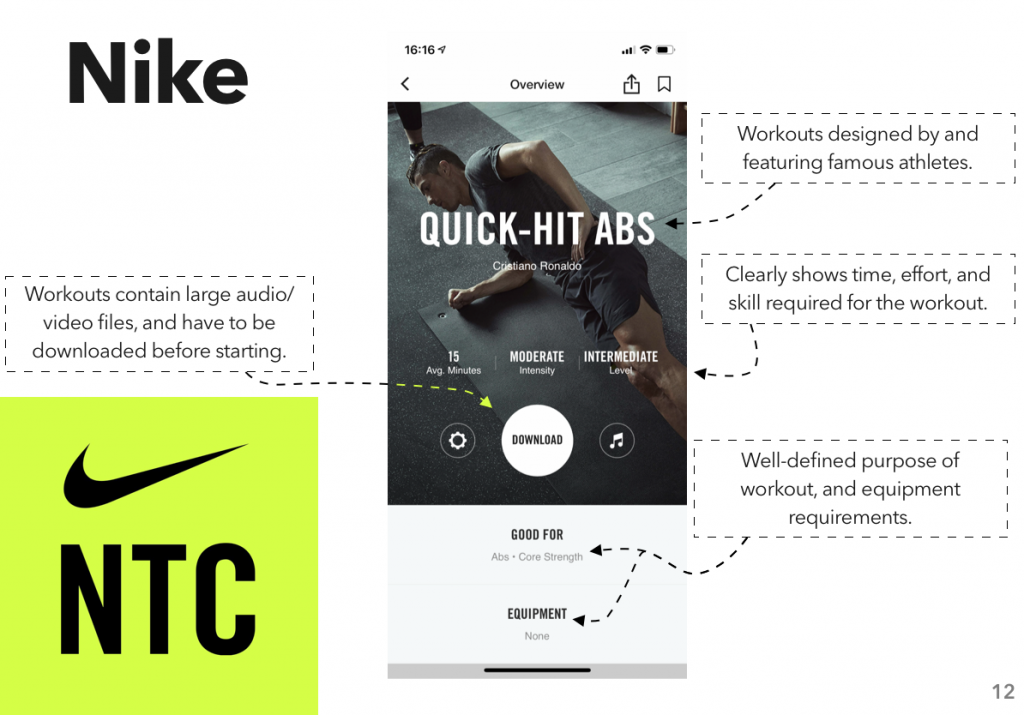

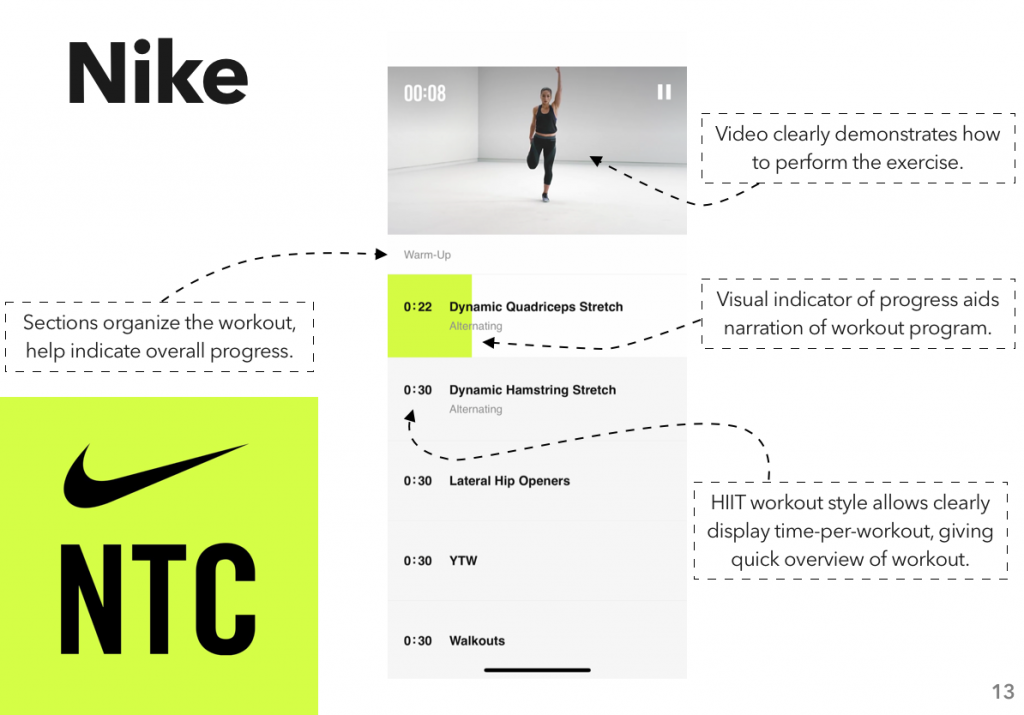

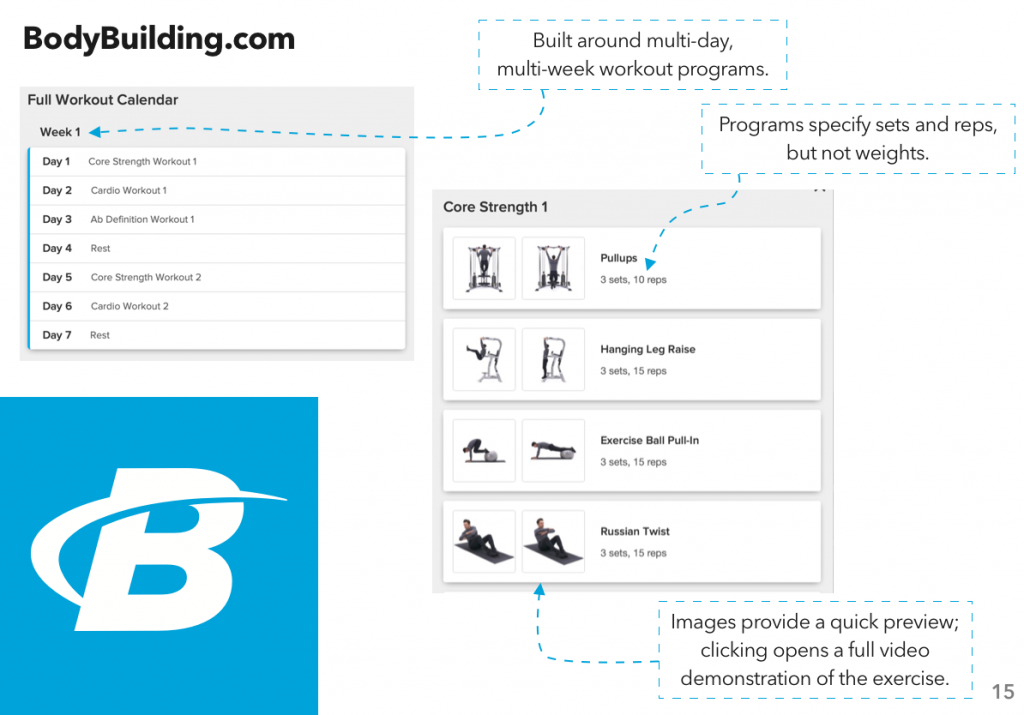

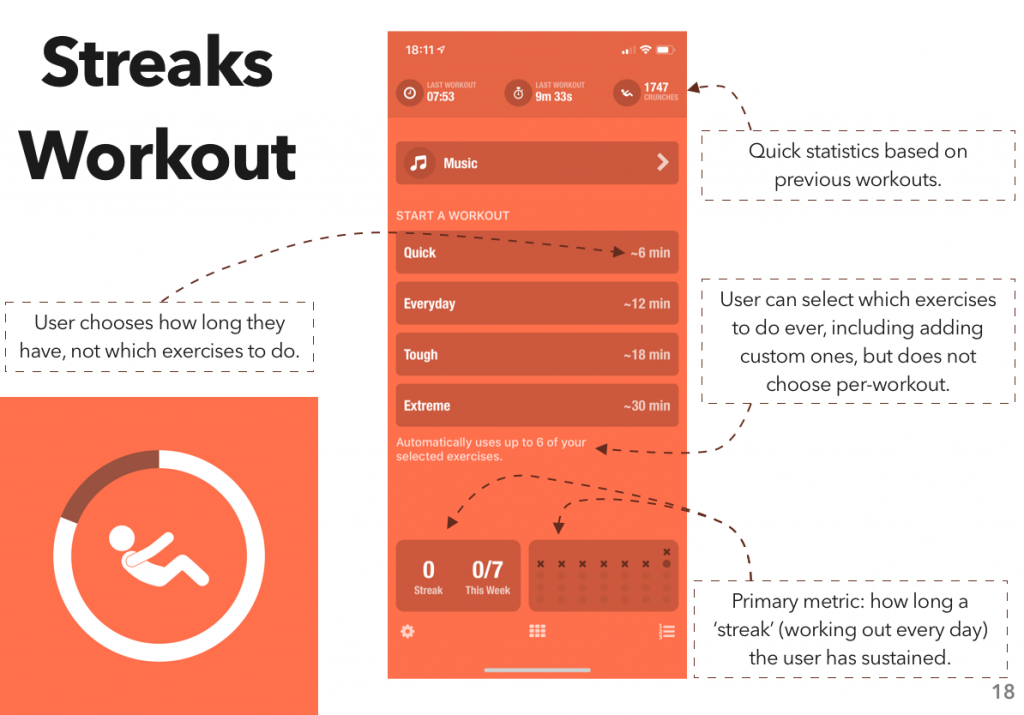

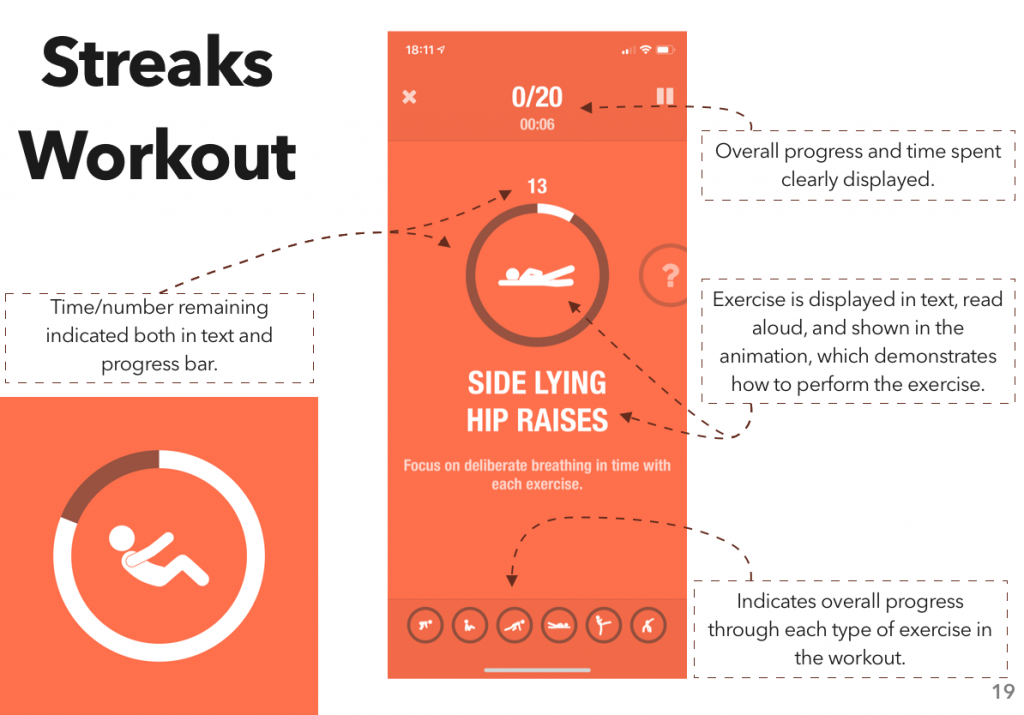

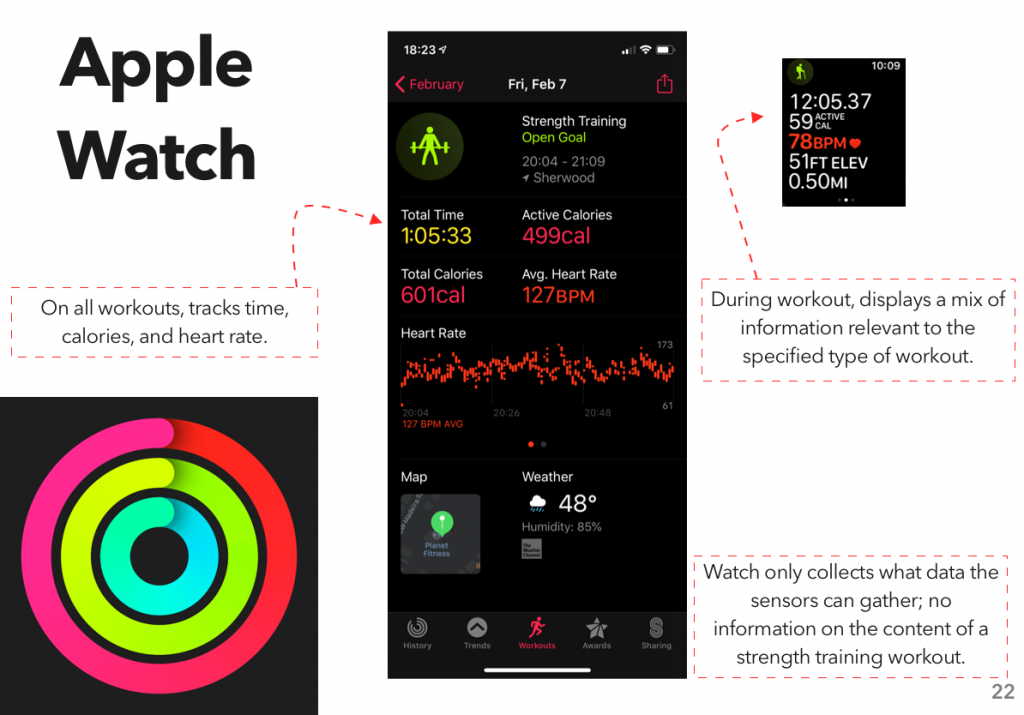

I also quite enjoyed the competitive analysis assignment: going through, as best I could, the entire market for a specific category was a surprisingly fun challenge. Its strategic value is also immediately obvious. At the end of that assignment, I closed out an open project where I’d been putting together sketches for a fitness application. While it would’ve been fun to build, it had nothing truly unique to help it establish itself in the market, and I’m too much of a Broke Millennial to devote that much time to ‘fun to build.’²

We also did some quantitative and qualitative user testing, which I found interesting but which also formed the main argument for my “I don’t have a career as a researcher ahead of me” stance. Interesting, yes; useful, when done right; surprisingly difficult to do right, absolutely.

The qualitative structure was a bit more forgiving than the quantitative one. We used UserTesting.com, which has a well-polished interface for assembling a test, a slightly less polished interface for going through one, and a checkout page that leaked memory at an astonishing rate.³ ⁴ Those complaints aside, the data I got was very helpful. The biggest issue I had with it came from my own mistake, and the more open nature of the test meant that even that failure helped me answer a different question I had.⁵

Going into the class, I thought I was going to like the quantitative testing more. I majored in computer science and threw in a math minor at the end; I’m a Numbers Guy. In execution, though, I wasn’t a fan. Part of this is probably due to the software we used: I was utterly unimpressed with Loop11. Their test assembly and reporting interface felt very Web 2.0, which I always find a bit alarming for an online-only business, and while the test-taking interface was a bit better (hello, Material Design 1.0!), it was unusable in Safari and a privacy disaster waiting to happen everywhere else.⁶ ⁷ And for all that trouble, the data I got wasn’t all that useful. One caveat, though: quantitative testing is better suited to summative testing, verifying that your completed design or product works as it’s supposed to, and I won’t be in a position to do that sort of testing for, oh, months at the earliest. Certainly not in time to use the results for this class, so I misused the technique a little in order to have something to work with.

As I said at the start, though I don’t intend to make a career of this, I’m glad to have taken the class. At the very least, it means I’ll have that much more respect for the work done by my colleagues who do make a career of it. And I sincerely doubt this will be the last time I do some testing myself; it’s important, and I quite like having the data to validate my designs.

1. You can do it with actual cards, if you have time and are in the same place as your participants. If not, you can use online tools. We used OptimalSort, which is available as part of the OptimalWorkshop suite of tools. If that’s the only thing you’re going to use it for, I honestly can’t recommend it: it’s a good tool, but on its own it isn’t worth the price. (Having seen their pricing structure, am I considering breaking into that market? Only vaguely.) ↩

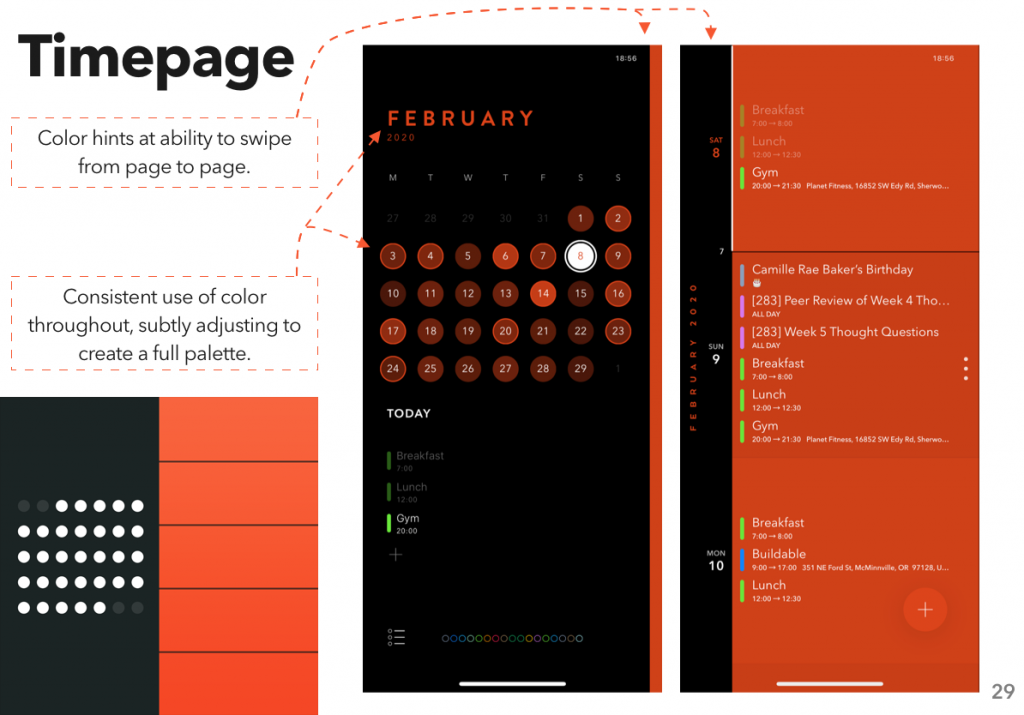

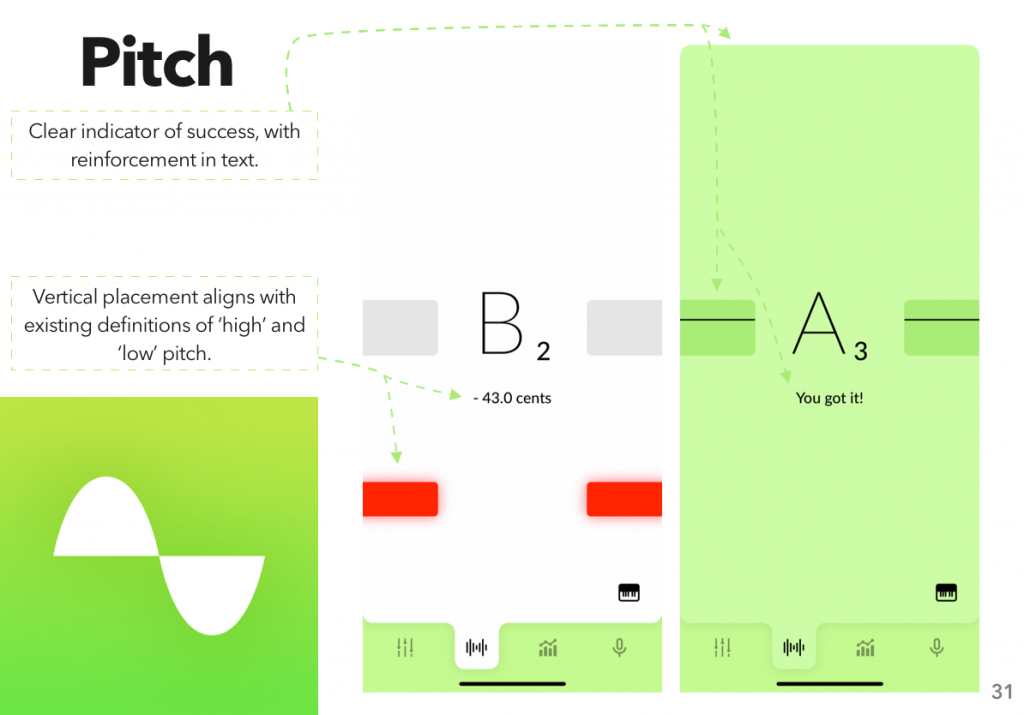

2. But hey, while we’re on the topic, check out some of my apps! ↩

3. I left the tab alone for ten minutes and came back to 30,000 error messages in Safari’s console and about 2 gigabytes of RAM consumed. On their checkout page. C’mon, folks, that’s just embarrassing. ↩

4. To be fair, I’ve got a pile of content blockers enabled, and at a quick glance quite a few of the error messages were a result of that. On the other hand, you shouldn’t be throwing that many little privacy-violating scripts at people to start with, and you definitely shouldn’t be doing it so badly. ↩

5. I’m being intentionally vague about what I was actually testing, because it’s something I may yet fully develop, and I don’t talk about things until they’re good and ready. ↩

6. I suspect Safari’s refusal to work with it was related to the latter concern; I didn’t dig into what was going wrong, just threw my hands in the air in disgust and installed Firefox. ↩

7. As far as I can tell from some cursory inspection of their ‘no code’ feature, it works by having your testers install a browser extension that… executes a man-in-the-middle attack, of sorts, on every page they view. When asking people to do the test, I told them to install the extension, do the test, and then immediately uninstall it, and that was only because I didn’t want to walk people through installing a completely separate browser for the test, as I had. ↩