I’m writing this a bit after WWDC has ended, and publishing it a bit further after that, so pardon the tardiness as I share my thoughts, in no particular order:

- As a user, I am quite excited by the new functionality in FaceTime, and the new Focus system for managing notifications. The system of tiered notifications looks great, and I’m excited for the, oh, 15 minutes most people will have between ‘installing iOS 15’ and ‘an app first abusing the notification level to send them spam.’[1] In iOS 16, can we get a “report notification as spam” button that immediately flags the app for App Review to yell at?

- As both a developer and user, I was immensely excited to see the ManagedSettings and ManagedSettingsUI frameworks – I really like the concept of Screen Time for externalized self-control, and being able to use custom implementations or build my own seemed like a dream.[2] Unfortunately, all those dreams were immediately crushed by the reveal that this all requires FamilyControls, so not only can I not do anything interesting with it as a developer, I can’t even use it myself. Because, as we all know, the only use case for “my phone won’t let me use it to waste time” is “parents managing their children.”

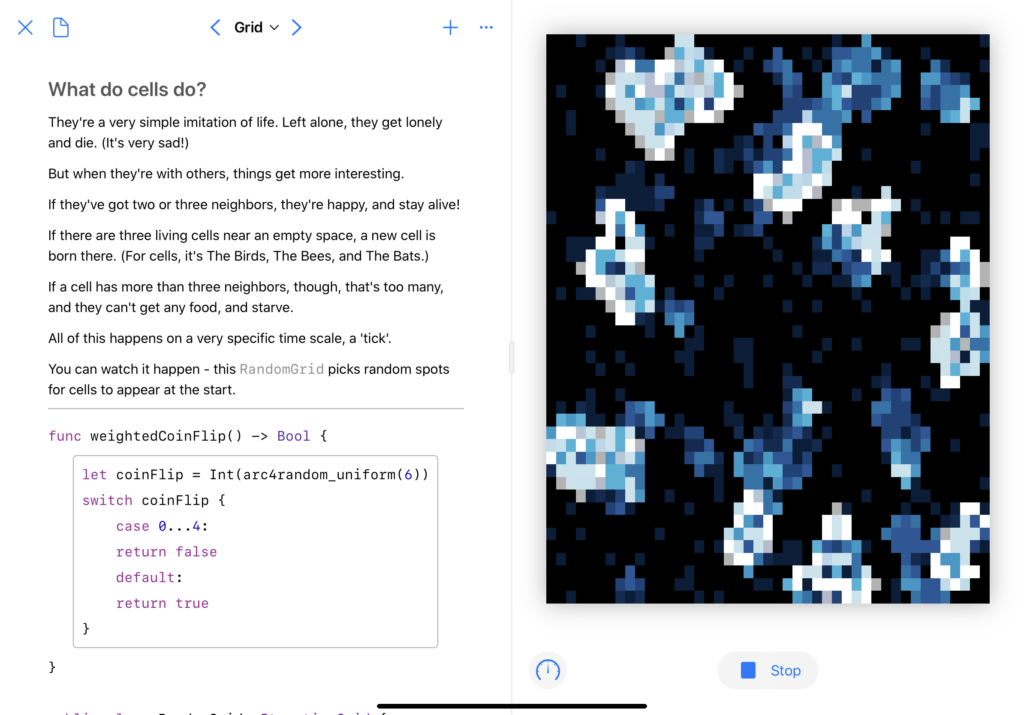

- Swift’s implementation of async/await looks great, and the stuff Apple’s doing with `@MainActor` seems to have met the goal of “the language eliminates an entire class of error” that initially gave us optionals, so I’m quite happy about that. I hope Vapor updates to async/await soon, I’d love to untangle some of my `flatMap` chains.

- The new Human Interface Guidelines section on Inclusion is very well-written and hits on some important points.

- I am incredibly excited about the virtual KVM thing coming to macOS and iPadOS – my desk setup features two monitors for macOS, and an iPad perpetually on the side, and the idea of being able to control the iPad without taking my hands off the main keyboard and mouse is very exciting.[3]

- Safari on iOS got support for web extensions, which is neat. I also like that they’re pulling things down toward the bottom of the screen, which should make them more reachable.

- Safari on macOS might finally do something that Mozilla never managed to do: convince me to switch to Firefox. I sincerely hope this is the peak of “aesthetics over functionality” in Apple’s design, and that it gets better from here, because this is a mess: lower contrast text, the core UI element moves around all the time so you can’t have any muscle memory, and they’ve buried every single button behind a ‘more’ menu.

- SwiftUI got lots of little updates, but the thing that I may be most excited about is the simple quality-of-life improvement that comes from SE-0299 – `.listStyle(.insetGrouped)` is much easier to type than `.listStyle(InsetGroupedListStyle())`, especially considering that Xcode can actually suggest `.insetGrouped`.
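For the curious, here is a rough sketch of the language feature behind that last point. It doesn't use SwiftUI at all: `RowStyle`, `InsetGroupedRowStyle`, `PlainRowStyle`, and `describeStyle` are names I made up as stand-ins, and I'm assuming a Swift 5.5 or later toolchain. The idea is that a static member declared in a constrained protocol extension can be reached with leading-dot syntax, even where the parameter is generic.

```swift
// Hypothetical stand-ins for SwiftUI's list style types; not the real framework.
protocol RowStyle {
    var name: String { get }
}

struct InsetGroupedRowStyle: RowStyle {
    let name = "insetGrouped"
}

struct PlainRowStyle: RowStyle {
    let name = "plain"
}

// Static members in a protocol extension constrained with `Self ==` can be
// looked up via leading-dot syntax in a generic context (Swift 5.5+).
extension RowStyle where Self == InsetGroupedRowStyle {
    static var insetGrouped: InsetGroupedRowStyle { InsetGroupedRowStyle() }
}

extension RowStyle where Self == PlainRowStyle {
    static var plain: PlainRowStyle { PlainRowStyle() }
}

// A generic function shaped roughly like a `.listStyle(_:)` modifier.
func describeStyle<S: RowStyle>(_ style: S) -> String {
    "style: \(style.name)"
}

// The old spelling and the new one resolve to the same concrete type.
let verbose = describeStyle(InsetGroupedRowStyle())
let concise = describeStyle(.insetGrouped)
print(verbose == concise)
```

Because the shorthand is an ordinary static member, it shows up in autocomplete like any other, which is presumably why Xcode can suggest it.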
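And a minimal sketch of why async/await appeals to me as a replacement for future chains. None of this is Vapor's API: `User`, `Post`, `fetchUser`, `fetchPosts`, `postTitles`, and `TitleListModel` are all hypothetical, and the "database" calls just return canned values. It assumes Swift 5.5 or later.

```swift
// Hypothetical models and lookups, standing in for real database calls.
struct User { let id: Int; let name: String }
struct Post { let authorID: Int; let title: String }

func fetchUser(id: Int) async throws -> User {
    User(id: id, name: "user-\(id)")
}

func fetchPosts(for user: User) async throws -> [Post] {
    [Post(authorID: user.id, title: "Hello from \(user.name)")]
}

// With futures, each dependent step is another chained or nested closure.
// With async/await, the same dependent steps read top to bottom.
func postTitles(forUserID id: Int) async throws -> [String] {
    let user = try await fetchUser(id: id)
    let posts = try await fetchPosts(for: user)
    return posts.map(\.title)
}

// @MainActor isolates this type's state to the main actor, so the compiler
// rejects unsynchronized access from other concurrency contexts.
@MainActor
final class TitleListModel {
    private(set) var titles: [String] = []

    func reload(userID: Int) async throws {
        titles = try await postTitles(forUserID: userID)
    }
}
```

The part I care about is `postTitles`: two dependent lookups with no closures in sight, and errors propagate through plain `try` instead of a separate failure path.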

1. In my case, it’ll probably be a couple days, and then Apple Music will punch through a Focus mode to notify me that Billie Eilish has a new single or something.

2. I already have a name for the app I want to build using these APIs. My first feature would just be “the existing Screen Time system, but it actually knows how time works.” Something like 90% of the time that I hit ‘one more minute’ I’m instead given a couple seconds. Apple, I know that time is hard, but it isn’t that hard.

3. Why the iPad? Because iPadOS is sandboxed, which limits the sort of bugs that Teams can introduce and evil that Zoom can. Sure, they both have macOS apps – but you shouldn’t install either of them.